“ESXi Packet Loss Troubleshooting with iPerf3 and pktcap-uw”

- Part 1: Baseline Testing and Setup

- Part 2: Capturing UDP Traffic Under Different CPU Loads

- Part 3: Analyzing Packet Loss with Wireshark

- Part 4: UDP Loss from Link Flapping and Network Instability

- Part 5: Inter-VLAN UDP Loss Caused by Bandwidth Limits ← You are here

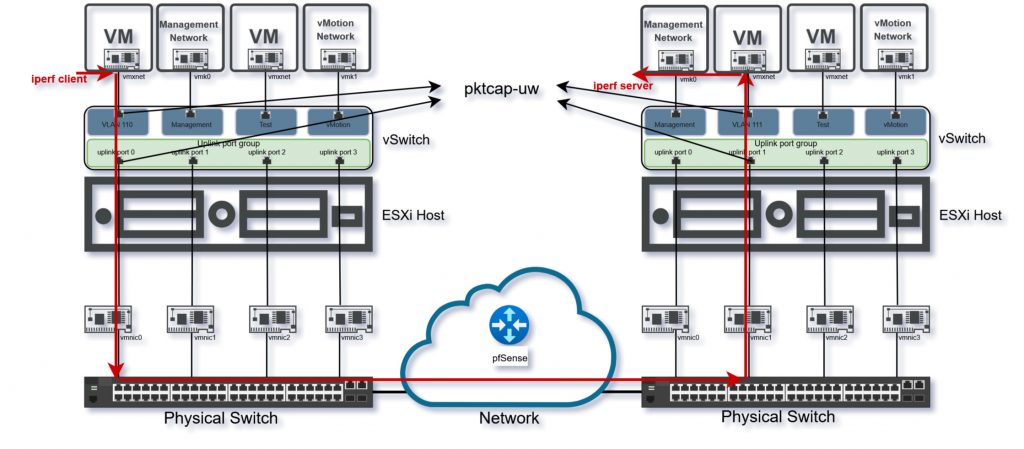

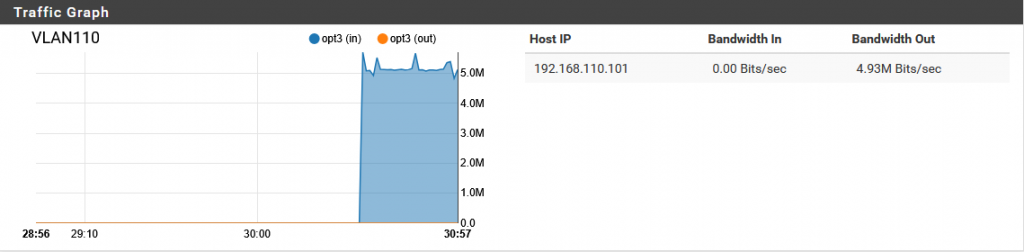

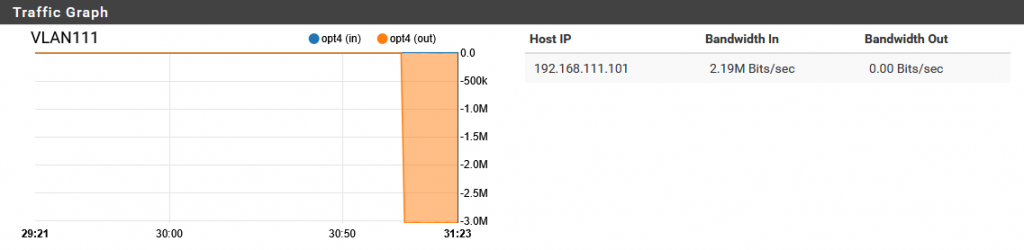

This scenario explores a more complex network configuration by placing the receiving VM on a separate VLAN. With pfSense performing the inter-VLAN routing and a bandwidth limiter configured at 3 Mbps, the goal is to observe how constrained routing paths affect UDP traffic, especially when the transmission rate exceeds available capacity.

Lab Setup

- Sending VM: 192.168.110.101 (VLAN 110)

- Receiving VM: 192.168.111.101 (VLAN 111)

- Routing: pfSense firewall performs inter-VLAN routing

- Bandwidth Limit: pfSense limiter set to 3 Mbps

- Traffic Test: iPerf3 – UDP – 5 Mbps test rate

Scenario 3: Inter-VLAN Packet Loss Due to Bandwidth Constraint

Goal: Evaluate whether a bandwidth limit enforced by a router between VLANs can lead to packet loss when traffic exceeds the configured cap.

Method:

- The receiving VM is placed on VLAN 111, with inter-VLAN routing handled by pfSense.

- A 3 Mbps limiter is configured on the pfSense firewall for traffic between VLAN 110 and VLAN 111.

- The sending VM transmits 5 Mbps of UDP traffic using iPerf3.

Expected Outcome:

- Packet loss is expected as the sending rate exceeds the bandwidth cap.

- Jitter may also increase due to buffering and queue drops.
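As a rough sanity check on the expected outcome, the loss rate can be estimated from the two rates alone. The sketch below assumes the limiter forwards exactly 3 Mbps of the offered 5 Mbps and ignores header overhead and queueing effects. The function names are illustrative, and the 1400-byte datagram size is the `-l 1400` value used by the iPerf3 client in this test.

```python
def expected_loss_fraction(offered_mbps: float, limit_mbps: float) -> float:
    """Fraction of traffic dropped once the offered rate exceeds the limit."""
    if offered_mbps <= limit_mbps:
        return 0.0
    return 1.0 - limit_mbps / offered_mbps


def datagrams_per_second(rate_mbps: float, payload_bytes: int) -> float:
    """Datagram rate needed to reach rate_mbps with the given payload size."""
    return rate_mbps * 1_000_000 / (payload_bytes * 8)


if __name__ == "__main__":
    print(f"expected loss: {expected_loss_fraction(5, 3):.0%}")
    print(f"datagrams/s:   {datagrams_per_second(5, 1400):.1f}")
```

Under these assumptions, roughly 40% of the datagrams should be lost, which gives a reference value to compare the measured iPerf3 loss against.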

Step One: Generate UDP Traffic with iPerf3

Start the iPerf3 server and client on the Windows VMs to generate UDP traffic:

iperf3 -s -B 192.168.111.101 -p 10101 --> Server

iperf3 -c 192.168.111.101 -u -b 5M -l 1400 -t 100 -p 10101 --> Client

Step Two: Start pktcap-uw Packet Capture

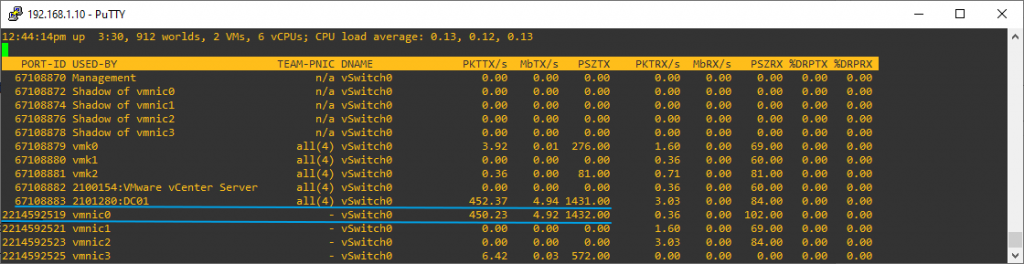

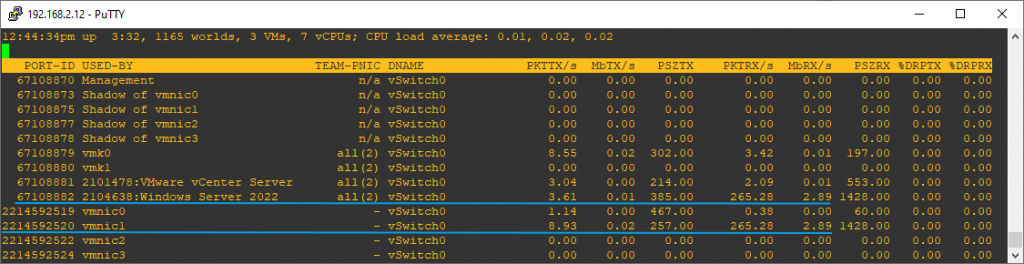

After the receiving VM was moved to VLAN 111, it obtained a new IP address. Because of uplink load balancing, traffic from the sender now flows through vmnic0, while the receiver uses vmnic1.

We start the pktcap-uw captures for the sending VM and for the receiving VM on their respective ESXi hosts.

pktcap-uw --switchport 67108883 --capture VnicTx --dstip 192.168.111.101 --proto 0x11 -o /vmfs/volumes/Datastore/traces/esxi0.switchport.67108883.pcapng &

pktcap-uw --uplink vmnic0 --capture UplinkSndKernel --dstip 192.168.111.101 --proto 0x11 -p 10101 -o /vmfs/volumes/Datastore/traces/esxi0.uplink.vmnic0.pcapng &

pktcap-uw --switchport 67108882 --capture VnicRx --srcip 192.168.110.101 --proto 0x11 -o /vmfs/volumes/DatastoreESXi2/traces/esxi2.switchport.67108882.pcapng &

pktcap-uw --uplink vmnic1 --srcip 192.168.110.101 --proto 0x11 -p 10101 -o /vmfs/volumes/DatastoreESXi2/traces/esxi2.uplink.vmnic1.pcapng

Step Three: Analyze iPerf3 Results

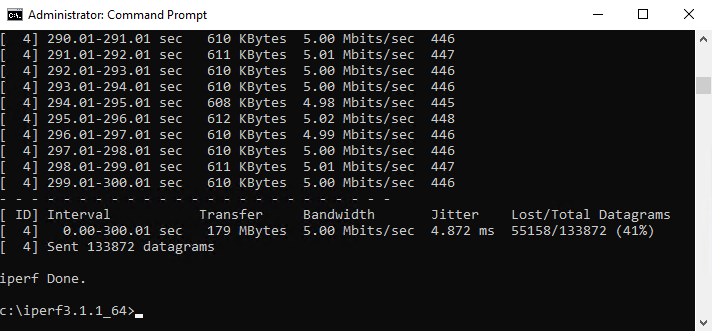

- iPerf3 output shows consistent packet loss of about 41% (the exact figure depends on pfSense queue behavior), close to the 40% implied by sending 5 Mbps through a 3 Mbps limiter

- Jitter is also higher due to buffering and periodic queue drops on pfSense

- CPU load on both VMs remains normal throughout the test
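When the test is repeated several times, reading the loss and jitter figures from JSON is easier than reading them off the console. iPerf3 can emit its report as JSON with the `-J` (`--json`) option. The sketch below assumes the UDP summary sits under `end.sum` with the fields `packets`, `lost_packets`, `lost_percent`, and `jitter_ms`, which should be verified against the iPerf3 version in use. The sample values are hypothetical and only illustrate the structure.

```python
import json


def summarize_udp(report: dict) -> dict:
    """Extract the UDP summary fields from a parsed iPerf3 JSON report."""
    summary = report["end"]["sum"]
    return {
        "packets": summary["packets"],
        "lost_packets": summary["lost_packets"],
        "lost_percent": summary["lost_percent"],
        "jitter_ms": summary["jitter_ms"],
    }


if __name__ == "__main__":
    # Hypothetical excerpt of a report; real reports contain many more fields.
    sample = json.loads(
        '{"end": {"sum": {"packets": 1000, "lost_packets": 410,'
        ' "lost_percent": 41.0, "jitter_ms": 2.5}}}'
    )
    print(summarize_udp(sample))
```

In practice the dictionary would come from `json.load()` on a file produced by running the client with `-J` and redirecting the output.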

Step Four: Analyze pktcap-uw Captures with Wireshark

Capture locations:

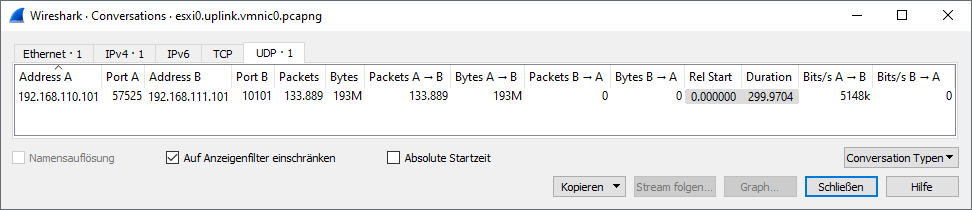

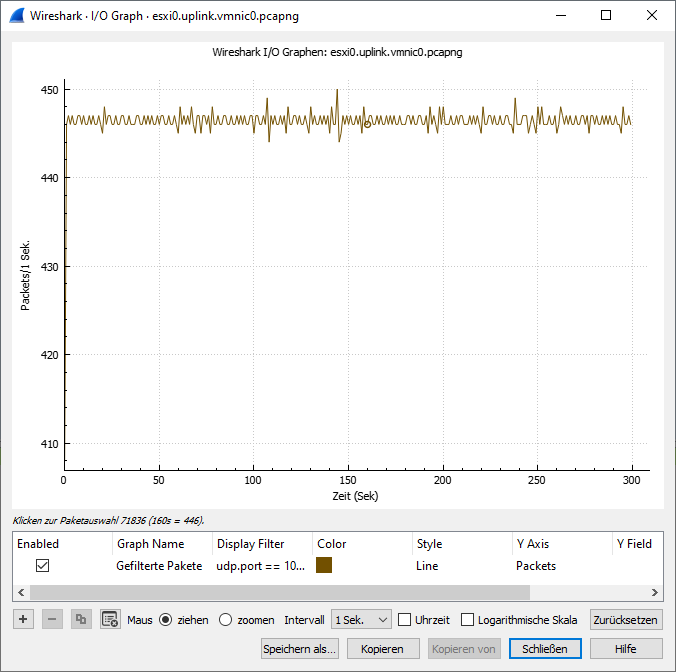

- Sender switchport (pktcap-uw): shows full transmission of UDP packets

- Sender uplink (pktcap-uw): confirms that traffic left the ESXi host without drops

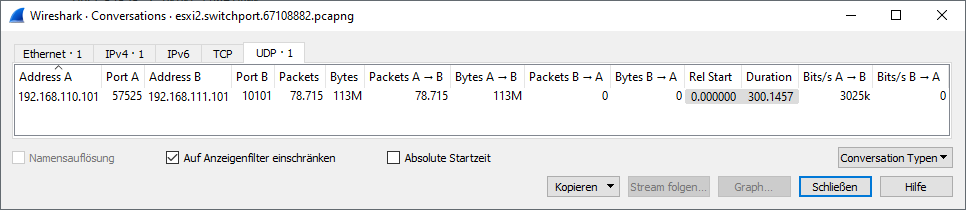

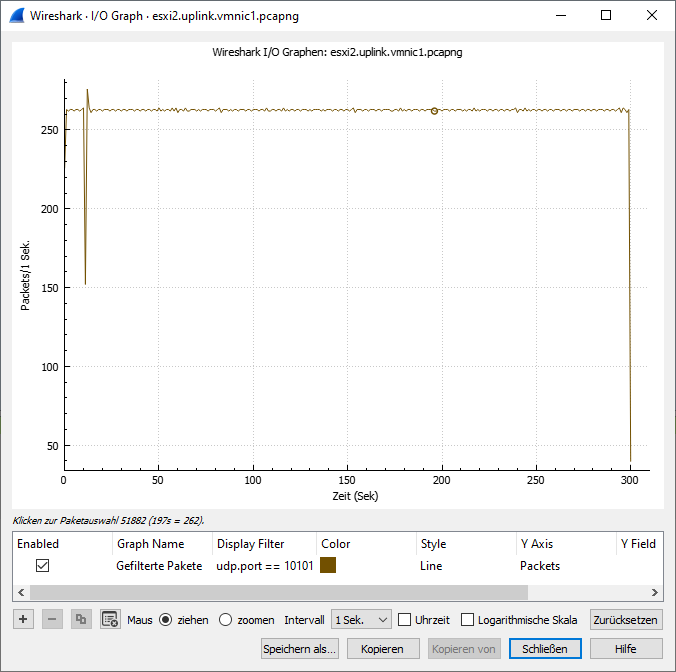

- Receiver switchport (pktcap-uw): shows reduced packet count

- Receiver iPerf3 stats: confirms packet loss
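The comparison above amounts to walking the capture points in path order and finding the first hop where the packet count drops. A minimal sketch of that reasoning follows. The counts are placeholders rather than measured values, and in practice they would be read from each capture file in Wireshark.

```python
def first_lossy_segment(points):
    """Return (upstream, downstream, lost) for the first hop where the
    packet count drops, or None if no count decreases along the path."""
    for (up_name, up_count), (down_name, down_count) in zip(points, points[1:]):
        if down_count < up_count:
            return up_name, down_name, up_count - down_count
    return None


if __name__ == "__main__":
    # Placeholder counts, ordered from sender to receiver.
    capture_points = [
        ("sender switchport", 1000),
        ("sender uplink", 1000),
        ("receiver uplink", 600),
        ("receiver switchport", 600),
    ]
    print(first_lossy_segment(capture_points))
```

With counts shaped like these, the first drop appears between the sender uplink and the receiver uplink, which places the loss on the network path between the two ESXi hosts.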

Key Findings

In this scenario, packet loss was observed between the ESXi hosts rather than within them. The pfSense firewall, acting as the inter-VLAN router, was configured with a 3 Mbps bandwidth limiter. Once the traffic exceeded this threshold, pfSense began dropping the excess packets, which is typical behavior for network devices when their queues overflow. Both VMs maintained normal CPU usage and transmitted and received packets without internal bottlenecks, so the source of the packet loss was the bandwidth constraint enforced on the network path, not a performance limitation within the VMs themselves.
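The drop behavior described here can be illustrated with a simplified model: a constant-rate sender feeding a bounded FIFO queue that drains at the limiter rate and tail-drops when full. This is a sketch under stated assumptions, not pfSense's actual implementation. The 50-slot queue size is a hypothetical value, and header overhead is ignored.

```python
from collections import deque


def simulate_limiter(offered_bps, limit_bps, packet_bytes, queue_slots, duration_s):
    """Return (sent, dropped) for a constant-rate sender behind a
    rate-limited FIFO queue with tail drop."""
    arrival_gap = packet_bytes * 8 / offered_bps  # seconds between arrivals
    service_time = packet_bytes * 8 / limit_bps   # seconds to forward one packet
    departures = deque()  # departure times of packets still in the limiter
    sent = dropped = 0
    for i in range(int(duration_s / arrival_gap)):
        now = i * arrival_gap
        # Discard packets that have already been forwarded.
        while departures and departures[0] <= now:
            departures.popleft()
        sent += 1
        if len(departures) >= queue_slots:
            dropped += 1  # queue full: tail drop
            continue
        # Service starts when the previous packet leaves, or immediately if idle.
        start = departures[-1] if departures else now
        departures.append(start + service_time)
    return sent, dropped


if __name__ == "__main__":
    sent, dropped = simulate_limiter(5_000_000, 3_000_000, 1400, 50, 100)
    print(f"sent={sent} dropped={dropped} loss={dropped / sent:.1%}")
```

Once the queue fills, the model drops roughly two of every five packets, and the packets that do get through wait behind a full queue, which is consistent with both the loss and the higher jitter seen in the test.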

Conclusion

This scenario highlights a different but very common source of UDP packet loss: congested network paths with enforced bandwidth limits. Even when the sender and receiver VMs are completely healthy, intermediate devices like firewalls or routers can become the point of failure when traffic exceeds the available capacity.

This is particularly important in environments with inter-VLAN traffic, VoIP deployments, or bandwidth-sensitive workloads. Always consider testing at multiple levels of the path when packet loss is suspected.

Final Conclusion

Throughout this five-part series, we’ve explored how various factors within and around a virtualized infrastructure can impact the reliability of UDP traffic. From CPU constraints inside virtual machines to transient link failures, routing bottlenecks, and bandwidth shaping, each scenario demonstrated a unique source of packet loss.

Using tools like iPerf3, pktcap-uw, and Wireshark, we captured and analyzed traffic at different points of the ESXi stack and across the network path, giving us full visibility into where — and why — UDP packets might be lost.

The key takeaway is clear: packet loss does not have a single origin. VM CPU load, unstable links, and router-level bandwidth enforcement can each be the cause. Careful testing and multi-layer packet analysis remain essential for diagnosing and solving packet loss in modern virtual environments.
